Haoming Wang

I am a PhD student in the Department of Electrical and Computer Engineering at the University of Pittsburgh, currently working in the Intelligent System Lab under the supervision of Dr. Wei Gao. I previously earned my bachelor’s degree in Automation from the Department of Control Science and Engineering at Zhejiang University. My research focuses on on-device AI, and I am also interested in spatial intelligence and explainable AI. I am on track to graduate in 2027 and am actively seeking internship opportunities for summer 2026. Feel free to reach out if you would like to connect.

Email / CV / Google Scholar / LinkedIn / Social Media


Recent Projects


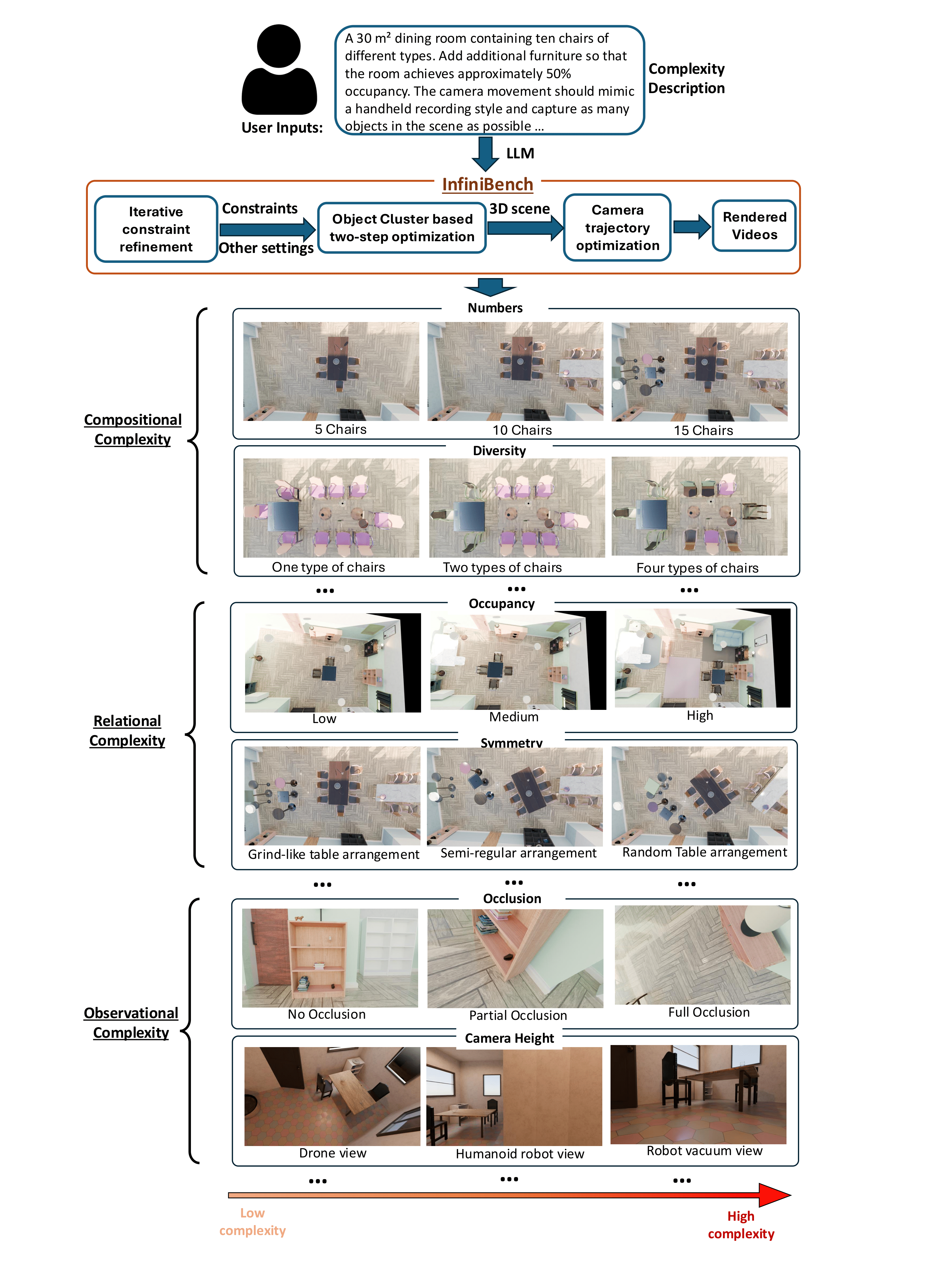

InfiniBench: Infinite Benchmarking for Visual Spatial Reasoning with Customizable Scene Complexity

Haoming Wang, Qiyao Xue, Wei Gao, 2025

paper

Modern vision-language models (VLMs) require robust evaluation of spatial reasoning, but existing benchmarks lack diversity, scalability, and fine-grained control over scene complexity. To address this, we introduce InfiniBench, a fully automated, customizable benchmark generator that produces an unlimited variety of photo-realistic 3D scenes and videos from natural-language descriptions. Through its agentic constraint-refinement framework, cluster-based layout optimizer, and task-aware camera trajectory design, InfiniBench enables precise analysis of VLM failure modes and outperforms prior 3D generation methods, while supporting diverse spatial-reasoning tasks such as measurement, perspective-taking, and spatiotemporal tracking.

Publications


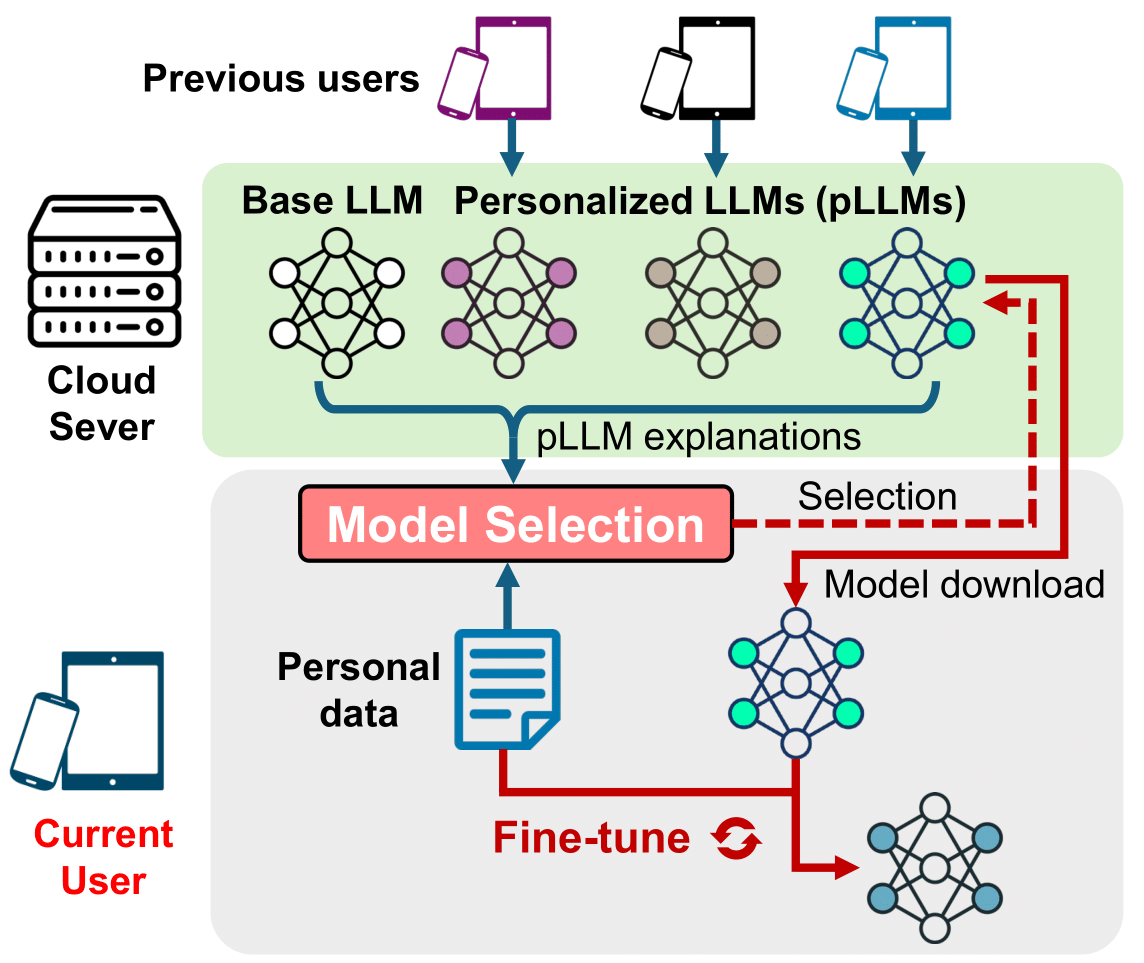

[MobiSys25] Never Start from Scratch: Expediting On-Device LLM Personalization via Explainable Model Selection

Haoming Wang, Boyuan Yang, Xiangyu Yin, Wei Gao. In Proceedings of the 23rd Annual International Conference on Mobile Systems, Applications and Services (Acceptance Ratio: 18.0%), 2025

paper / slides

Personalizing large language models (LLMs) is crucial for meeting individual user needs on mobile devices. However, on-device personalization is constrained by limited computational resources and scarce personal data. We propose XPerT, a technique that, instead of starting from scratch, selects an already personalized LLM based on the explainability of its prior fine-tuning and then further fine-tunes it with the user’s data.


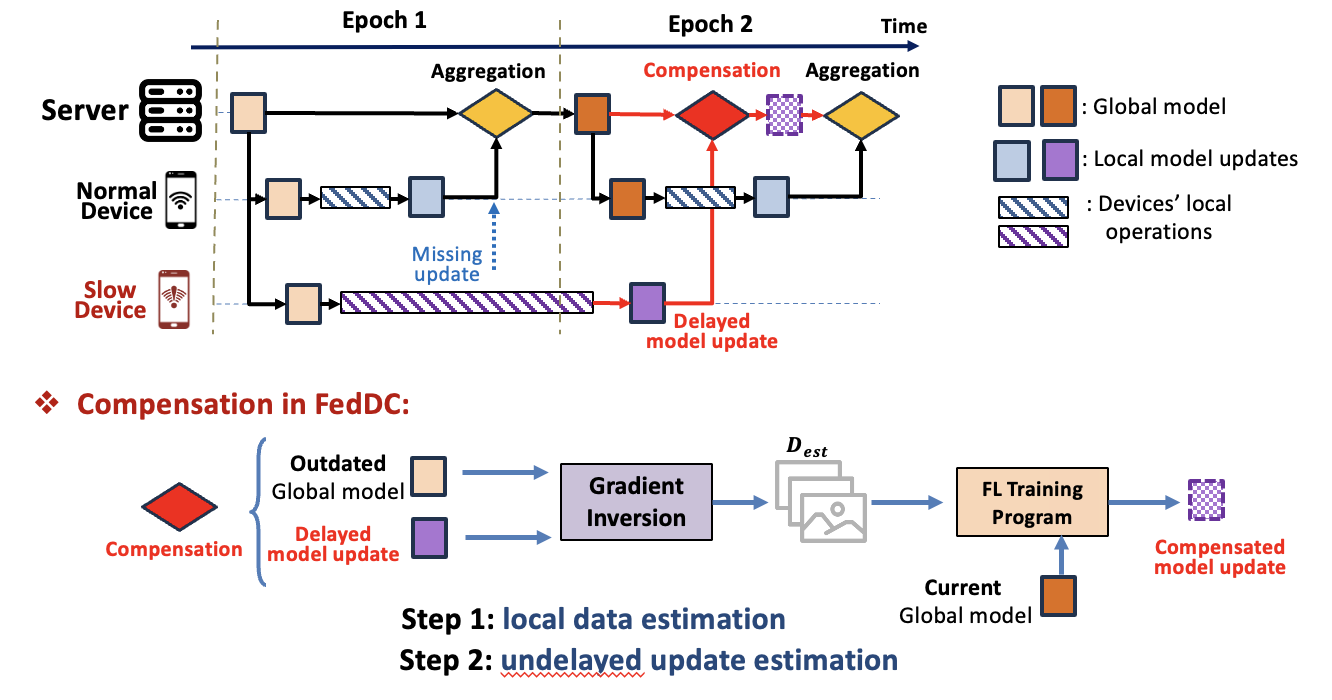

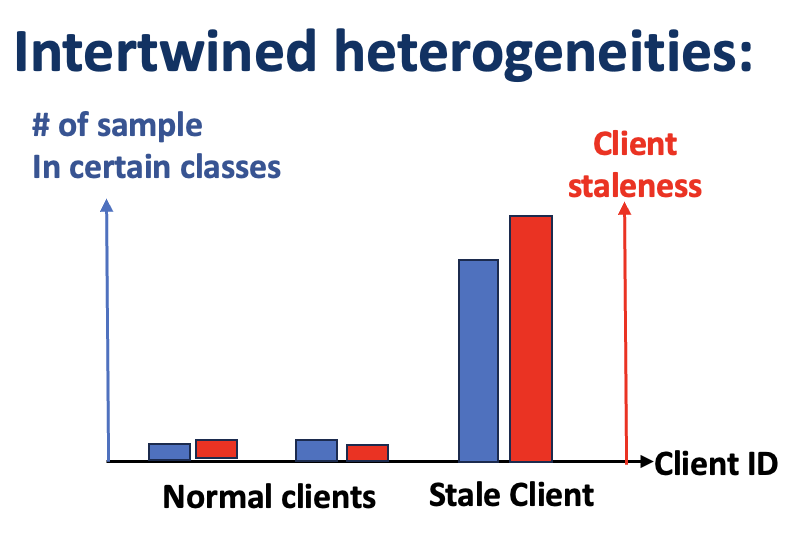

[MobiCom25] When Device Delays Meet Data Heterogeneity in Federated AIoT Applications

Haoming Wang, Wei Gao. In Proceedings of the 31st ACM International Conference on Mobile Computing and Networking (Acceptance Ratio: 17.1%), 2025

paper / slides

Federated AIoT leverages distributed IoT data to train AI models, but heterogeneous devices introduce both data heterogeneity and varying degrees of update staleness, which degrade model performance and slow down training. Existing federated learning (FL) frameworks treat device delays as independent of data heterogeneity, which is unrealistic. We propose FedDC, a technique that mitigates the impact of delays when these two factors are correlated. FedDC uses gradient inversion to infer clients’ local data distributions and compensate for the resulting delay-induced bias in model updates.


[AAAI25] Tackling Intertwined Data and Device Heterogeneities in Federated Learning with Unlimited Staleness

Haoming Wang, Wei Gao. In Proceedings of the 39th AAAI Conference on Artificial Intelligence (Acceptance Ratio: 23.4%), 2025

paper / arxiv / poster / slides

Federated Learning (FL) is challenged by intertwined data and device heterogeneities: differences in clients’ local data distributions and in the staleness of their model updates. Traditional FL methods treat these two problems separately, which is unrealistic and often ineffective. We propose a novel FL framework that efficiently addresses these intertwined heterogeneities by converting stale model updates into unstale ones. Our method estimates clients’ local data distributions from their stale updates and uses these estimates to compute unstale updates, without requiring auxiliary datasets, fully trained local models, or extra client-side computation or communication.

arXiv Papers


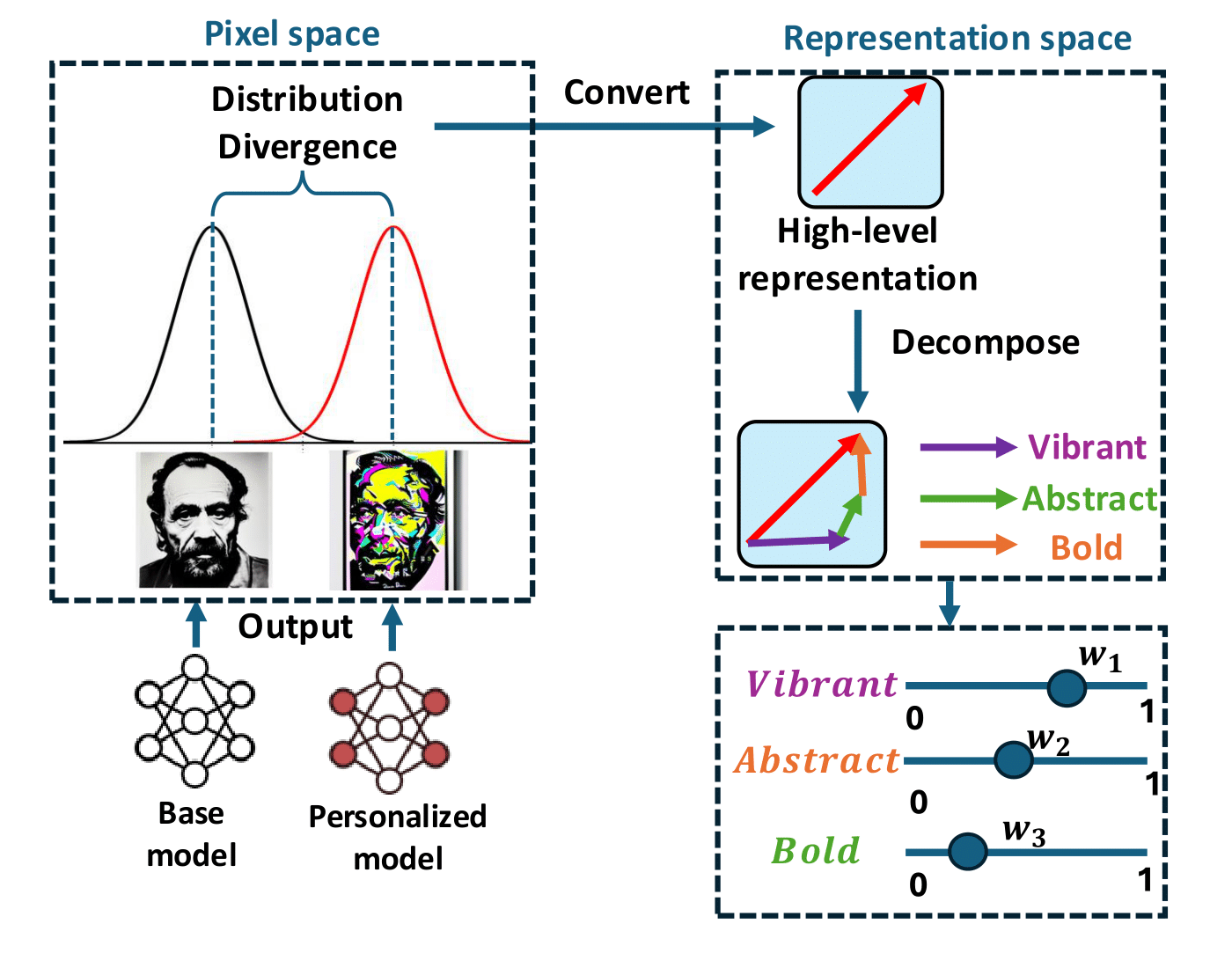

Deciphering Personalization: Towards Fine-Grained Explainability in Natural Language for Personalized Image Generation Models

Haoming Wang, Wei Gao, 2025

paper

Personalized image generation models better serve diverse user needs but often lack explainability about how personalization occurs. Visual cues exist, but they are hard for users to interpret, and current natural-language explanations are too coarse-grained to capture the multiple aspects or degrees of personalization. We propose FineXL, a technique for Fine-grained eXplainability in natural Language, which generates a detailed textual description and a quantitative score for each aspect of personalization.


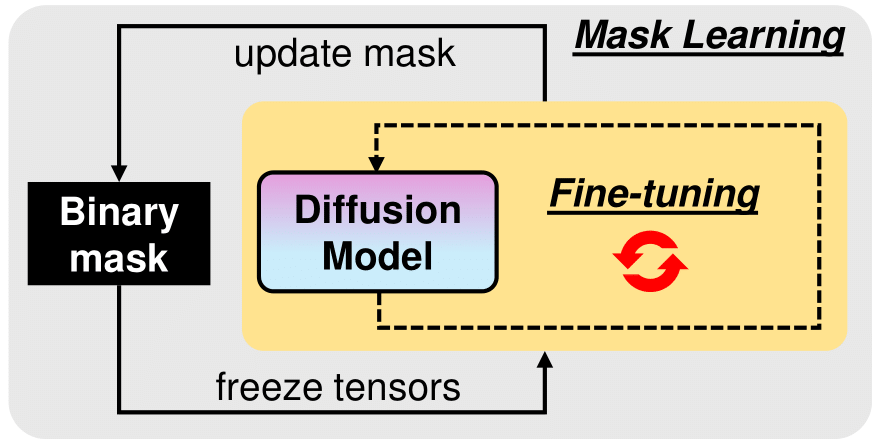

FreezeAsGuard: Mitigating Illegal Adaptation of Diffusion Models via Selective Tensor Freezing

Kai Huang, Haoming Wang (co-author), Wei Gao, 2024

paper

Text-to-image diffusion models can be fine-tuned for personalized domains, but this adaptability also enables misuse such as forging images of public figures, replicating copyrighted artworks, or producing explicit content. Existing detection and unlearning methods fail to prevent such illegal adaptations. We introduce FreezeAsGuard, a technique that irreversibly mitigates this misuse by selectively freezing the tensors in pre-trained diffusion models that are critical to illegal adaptations, while preserving the models’ capability for legal fine-tuning.

Others


Spatial Reasoning in Multimodal Large Language Models: A Survey of Tasks, Benchmarks and Methods

Weichen Liu, Qiyao Xue, Haoming Wang, Xiangyu Yin, Boyuan Yang, Wei Gao

paper